A lot of organisations are currently converting their applications to SaaS. Unfortunately, not much has been written about “conversion & migration” (at least not much that I found useful), and I could have done with an article like this a few months ago.

The objective of this article is to give you a high-level, pragmatic overview of what you will need to think about, and do, when your company decides to convert or migrate your app to the Cloud.

This article will expose the unknown unknowns, force you to rethink your assumptions, ask you some tough, thought-provoking questions and offer some tips so that you can give your company better estimates and an idea of what is involved.

Before we start, I would like to let you know that I was involved in a B2B SaaS conversion project that migrated to Microsoft Azure. I will draw on that personal experience a lot, and hopefully pass on some real-world lessons by doing so.

This article is broken into two parts:

- The Business section is for executives and software architects. It introduces you to some Cloud concepts and is supposed to make you think about the wider company, the costs, why you are migrating and what is going to be involved.

- The Technical section is for software architects and developers. It is supposed to make you think about how you can go about the migration and what technical details you will need to consider.

Business: Costs, Why and Impact.

1. Understand the business objectives

Converting your app to SaaS is not going to be cheap. It can take from a few weeks to a number of months, depending on the product size. So before you start working on this, understand why the business wants to migrate.

A few good reasons to migrate:

- Removal of existing data center (cost saving)

- International expansion (cost saving)

- A lot of redundant compute that is used only during peak times (cost saving)

- Cloud service provider provides additional services that can complement your product (innovation)

It’s vital that you understand the business objectives and deadlines, otherwise you will not be able to align your technical solution with the business requirements.

2. Understand the terminology

Here are some terms you need to get to know:

| Term | Wikipedia Definition |
| --- | --- |
| Multi Tenancy | The term “software multitenancy” refers to a software architecture in which a single instance of software runs on a server and serves multiple tenants. A tenant is a group of users who share a common access with specific privileges to the software instance. |
| IaaS (Infrastructure as a Service) | “Infrastructure as a Service (IaaS) is a form of cloud computing that provides virtualized computing resources over the Internet. IaaS is one of three main categories of cloud computing services, alongside Software as a Service (SaaS) and Platform as a Service (PaaS).” |
| PaaS (Platform as a Service) | “Platform as a service (PaaS) is a category of cloud computing services that provides a platform allowing customers to develop, run, and manage applications without the complexity of building and maintaining the infrastructure typically associated with developing and launching an app.” |
| SaaS (Software as a Service) | Software as a service (SaaS; pronounced /sæs/) is a software licensing and delivery model in which software is licensed on a subscription basis and is centrally hosted. It is sometimes referred to as “on-demand software”. SaaS is typically accessed by users using a thin client via a web browser. |

Here is a picture that explains IaaS, PaaS and SaaS very well:

3. Migration Approaches

| Migration Approach | Description |
| --- | --- |
| Lift and shift | Take your existing services and migrate them to IaaS (VMs) as they are, then redesign your application for the Cloud in the future. |
| Multi tenancy | Redesign your application so that it supports multi tenancy, but ignore other benefits for now. This approach might combine IaaS with PaaS. For example, you might host your application in a VM but use Azure SQL. |
| Cloud native | Redesign your application completely so that it is Cloud native: it has built-in multi tenancy and uses PaaS services. For example, you would use Azure Web Apps, Azure SQL and Azure Storage. |

Which approach you take will depend on the skills within your organisation, on what products (if any) you already have in the Cloud, and on how much time you have before the deadline.

I am not going to focus on lift and shift in this article, as it just takes what you already have and moves it to the Cloud. Strictly speaking that is not SaaS; it is “Hosted” on the Cloud.

4. Identify technology that you will need

Chances are your topology will be a combination of:

| Service | | Technology Required |
| --- | --- | --- |
| Front-end | ASP.NET MVC, Angular, etc | IIS with .NET Framework |
| Back-end | Java, .NET, Node.JS, etc | Compute node that can host JVM, or WCF and can listen on a port |
| Persistence | SQL Server, MySQL, etc | SQL Server 2016, Azure Storage |
| Networking | Network segregation | Sub networks, VNET, Web.Config IP Security, Access Control List |

Understand what you actually need, don’t assume you need the same things. Challenge old assumptions, ask questions like:

- Do you really need all of that network segregation?

- Do you really need to have front-end and back-end hosted on different machines? By hosting more services on a single machine you can increase compute density and save money. Need to scale? Scale the whole thing horizontally.

- Do you really need to use a relational data store for everything? What about NoSQL? By storing binary data in Azure Storage you will reduce Azure SQL compute (DTUs), which will save you a lot of money long term.

- In this new world, do you still really need to support different on-prem platforms? SQL Server and MySQL? How about Linux and Windows? By not doing this, you can save on testing, hosting costs, etc.

5. Scalability

The greatest thing about Cloud is scalability. You can scale effortlessly horizontally (if you architect your application correctly). How will your application respond to this? What is currently the bottleneck in your architecture? What will you need to scale? Back-end? Front-end? Both? What about persistence?

Find out where the bottlenecks are now, so that when you redesign your application you can focus on them. Ideally you should be able to scale anything in your architecture horizontally, however time and budget might not allow you to do this, so you should focus on the bottlenecks.

6. Service Level Agreements (SLA) & Disaster Recovery

Services on the Cloud go down often, and what goes down is fairly random. Please do take a look at the Azure Status History.

The good news is that there is plenty of redundancy in the Cloud, so you can fail over or set your services up again and be up and running.

Something to think about:

- What kind of SLA will you need? 99.9%? Is that per month or per year?

- What kind of Recovery Point Objectives will you need to reach?

- What kind of Recovery Time Objectives will you need to reach?

- How much downtime has your preferred Cloud service provider experienced recently? For example, Azure had around 11 hours of downtime this year (2016).

- If you store some data in relational database, some in NoSQL, some on the queue, when your services all go down, how are you going to restore your backups?

- How will you handle data mismatch e.g. Azure SQL being out of sync with Azure Storage?
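To make the “per month or per year” question concrete, here is a small Python sketch that converts an SLA percentage into a downtime budget. The function names, the 30-day month and the 365-day year are assumptions made for illustration; check how your provider actually defines its measurement window.

```python
def allowed_downtime_minutes(sla_percent: float, period_hours: float) -> float:
    """Return the downtime budget, in minutes, for an SLA over a period."""
    return (1 - sla_percent / 100) * period_hours * 60


def achieved_availability(downtime_hours: float, period_hours: float) -> float:
    """Return availability as a percentage given the observed downtime."""
    return (1 - downtime_hours / period_hours) * 100


HOURS_PER_MONTH = 30 * 24   # assumption: 30-day month
HOURS_PER_YEAR = 365 * 24   # assumption: non-leap year

monthly_budget = allowed_downtime_minutes(99.9, HOURS_PER_MONTH)
yearly_budget = allowed_downtime_minutes(99.9, HOURS_PER_YEAR)

print(f"99.9% per month allows {monthly_budget:.1f} minutes of downtime")
print(f"99.9% per year allows {yearly_budget / 60:.2f} hours of downtime")
print(f"11 hours down in a year is {achieved_availability(11, HOURS_PER_YEAR):.3f}%")
```

Under these assumptions a monthly 99.9% target leaves roughly 43 minutes per month, while a yearly one leaves under 9 hours per year, so 11 hours of downtime in a year would miss 99.9% either way.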

The higher the SLA, the more likely it is that you will need to move towards an active-active architecture, but that is complex and requires maintenance and lots of testing. Do you really need it? Will active-cold do?

7. Security & Operations

Security and Operations requirements are different on the Cloud. Tooling is not as mature, especially if you are looking to use PaaS services, and the tooling that is available out of the box takes time to get used to, for example Azure App Insights, Security Center, etc.

Find out what security controls will need to be in place, and find out what logs and telemetry the ops team will need to do their job.

8. Runtime cost model

Start putting together a runtime cost model for different Cloud service providers. At this stage you know very little about these services, however you should start estimating the runtime costs. Cloud service providers normally provide tools to help you with this, for example Azure provides the Azure Pricing Calculator.

9. Staff Training

Now that you have a rough idea which service provider you will choose, start looking into training courses. Training can be official, e.g. staff can get the Cloud Platform MCSA certification, or it can be more unofficial. However, no matter what you do, I encourage you to give your staff time to play around with different services, put together a few PoCs, read a few books (e.g. Developing Microsoft Azure Solutions) and watch some Azure videos (e.g. Azure Friday).

This training will require some time. If you haven’t got time, consider consultancy.

10. Consultancy

If you would like to get some help, there are a number of things you can do with Azure:

- Hire Azure migration consultants

- Contact the “Microsoft Developer eXperience” team; they might be able to help you with the migration by investing in your project (free consultation & PoCs).

- Sign up for Microsoft “Premier Support for Developers”; you will get a dedicated Application Development Manager who will be able to assist and guide you and your team.

I strongly advise you to grow talent internally and not rely solely on consultants. A hybrid approach works best: get some consultancy and get the consultants to coach your internal team.

Technical: How are you going to do it?

1. Multi tenancy data segregation

Hosted applications tend to be installed on a machine and used by one company, whereas SaaS applications are installed once and used by lots of different companies. With sharing comes great responsibility. There are a number of ways that you can handle multi tenancy data segregation:

- Shared Database, Shared Schema (Foreign Keys partition approach)

- Shared Database, Separate Schema

- Separate Database

What is my take? If your app is very simple (To Do List, basic document store, etc), go for the Foreign Key partition approach. If your app is more complicated, e.g. a finance or medical system, I would go for the separate database / separate persistence approach. Separate persistence will reduce the chance of a cross-tenant data leak, make tenant deletion simpler and, more importantly, make ops easier: you can upgrade each tenant’s persistence individually, you can back tenants up more often if you need to, and most likely you will not need to worry about sharding. However, the separate persistence approach is not simple to implement; it requires a lot of development.
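As a rough illustration of the separate database approach, the sketch below keeps a catalogue that maps each tenant to its own database and only hands out connections through that catalogue. It uses SQLite in-memory databases purely so that the example runs anywhere; the `TenantCatalog` class, the table and the tenant names are hypothetical, and a real system would map tenants to something like Azure SQL connection strings instead.

```python
import sqlite3


class TenantCatalog:
    """Maps a tenant id to its own database (hypothetical helper)."""

    def __init__(self) -> None:
        self._connections: dict[str, sqlite3.Connection] = {}

    def provision(self, tenant_id: str) -> None:
        # Each tenant gets a separate database, so tenants never share tables.
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, title TEXT)")
        self._connections[tenant_id] = conn

    def connection_for(self, tenant_id: str) -> sqlite3.Connection:
        # Fail loudly for unknown tenants rather than falling back to a default.
        try:
            return self._connections[tenant_id]
        except KeyError:
            raise LookupError(f"unknown tenant: {tenant_id}") from None

    def delete(self, tenant_id: str) -> None:
        # Deleting a tenant is just dropping that tenant's database.
        self._connections.pop(tenant_id).close()


catalog = TenantCatalog()
catalog.provision("contoso")
catalog.provision("fabrikam")

catalog.connection_for("contoso").execute(
    "INSERT INTO documents (title) VALUES (?)", ("Q1 invoice",)
)

count_sql = "SELECT COUNT(*) FROM documents"
contoso_count = catalog.connection_for("contoso").execute(count_sql).fetchone()[0]
fabrikam_count = catalog.connection_for("fabrikam").execute(count_sql).fetchone()[0]
print(contoso_count, fabrikam_count)
```

The point of the design is that there is no query that can return another tenant's rows by forgetting a `WHERE tenant_id = ...` clause, which is the main risk of the shared schema approach.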

2. Login Experience

On the Cloud the user experience will need to change. Because many businesses are using your application at the same time, you need to somehow know who is who. There are a number of ways for users to access their tenant area:

- Dedicated URL

- Recognise Possible Tenants On Login

Dedicated URL

You can give your tenants a dedicated URL so that they can log in to their app. For example, you can give them access to yourapp.com/customername or customername.yourapp.com.

However, this approach will require you to send out an email to the tenant, informing them what their URL is. If they forget the URL they will end up contacting your support team.
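Here is a minimal Python sketch of how the tenant could be worked out from either URL style. The `tenant_from_url` function and the handling of `www` are assumptions for illustration; in practice you would also check the result against your list of known tenants.

```python
from typing import Optional
from urllib.parse import urlparse

BASE_DOMAIN = "yourapp.com"  # the example domain used in this section


def tenant_from_url(url: str) -> Optional[str]:
    """Return the tenant name from a subdomain or the first path segment."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()

    # customername.yourapp.com -> "customername"
    suffix = "." + BASE_DOMAIN
    if host.endswith(suffix):
        subdomain = host[: -len(suffix)]
        if subdomain and subdomain != "www":
            return subdomain

    # yourapp.com/customername -> "customername"
    if host in (BASE_DOMAIN, "www." + BASE_DOMAIN):
        segments = [segment for segment in parsed.path.split("/") if segment]
        if segments:
            return segments[0].lower()

    # Unknown host, or no tenant in the URL.
    return None


print(tenant_from_url("https://customername.yourapp.com/login"))
print(tenant_from_url("https://yourapp.com/customername/login"))
```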

Recognise Possible Tenants On Login

The tenant goes to yourapp.com and logs in. When they log in they are presented with the possible tenants that they can access.

With this approach tenants don’t need to remember their URL, however you need to introduce an extra step before they log in and you need to scan all possible tenants to see if the logged in user exists. This is more work, not to mention, what if your customer wants to use AzureAD? How do you know which AzureAD should be used? Now you will need to introduce mapping tables, extra screens, etc.
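The mapping table mentioned above can be sketched like this in Python. The membership data and function names are hypothetical; the idea is simply to index users to tenants up front so that the login step is a lookup rather than a scan of every tenant.

```python
from collections import defaultdict

# Hypothetical mapping table: which tenants each login belongs to.
MEMBERSHIPS = [
    ("alice@example.com", "contoso"),
    ("alice@example.com", "fabrikam"),
    ("bob@example.com", "contoso"),
]


def build_index(memberships):
    """Index memberships by email so login does not scan every tenant."""
    index = defaultdict(list)
    for email, tenant in memberships:
        index[email.lower()].append(tenant)
    return index


def tenants_for(index, email: str) -> list:
    """Return the tenants to offer the user once they have entered their email."""
    return sorted(index.get(email.strip().lower(), []))


index = build_index(MEMBERSHIPS)
print(tenants_for(index, "Alice@Example.com"))
```

If the list has one entry you can skip the extra screen; if it has several, the user picks one and you can then apply that tenant's own authentication settings.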

3. Application state, is your application already stateless?

If you need your application to scale out then your compute instances should not keep any state in memory or on disk. Your application needs to be stateless.

However, if you need to keep some basic state temporarily, such as session state, then you are in luck: Microsoft Azure allows you to use ARR (Application Request Routing) so that all requests from a client get forwarded to the same instance every time. This should be a temporary solution, because you can end up overloading a single instance: it takes a while for the cookies to expire and for the load to spread to other instances.
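To show what “stateless” means in practice, here is a small Python sketch in which two compute instances serve the same session because the state lives in a shared store rather than in either instance. `SharedSessionStore` is a stand-in for an external cache or database, and all names are hypothetical.

```python
import uuid


class SharedSessionStore:
    """Stand-in for an external store such as a cache or a database."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def load(self, session_id: str) -> dict:
        return dict(self._data.get(session_id, {}))

    def save(self, session_id: str, state: dict) -> None:
        self._data[session_id] = dict(state)


class AppInstance:
    """One compute instance; it holds no session state of its own."""

    def __init__(self, name: str, store: SharedSessionStore) -> None:
        self.name = name
        self._store = store

    def add_to_basket(self, session_id: str, item: str) -> list:
        # Load, change and save on every request, so any instance can serve it.
        state = self._store.load(session_id)
        basket = state.get("basket", []) + [item]
        state["basket"] = basket
        self._store.save(session_id, state)
        return basket


store = SharedSessionStore()
instance_a = AppInstance("a", store)
instance_b = AppInstance("b", store)

session_id = str(uuid.uuid4())
instance_a.add_to_basket(session_id, "book")
basket = instance_b.add_to_basket(session_id, "pen")  # served by another instance
print(basket)
```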

4. What cloud services should you use?

This largely comes down to the security and performance requirements.

Azure Web Apps are great, however they don’t support VNETs, which means you can’t block OSI layer 3 traffic to the front-end and back-end. You can, however, use Web.Config IP Security to restrict access to the front-end and back-end, and Azure SQL supports Access Control Lists (ACLs). Azure Web Apps also don’t scale very well vertically, so if you have lots of heavy compute it might make more economic sense to use Service Fabric or Cloud Services so that you can scale both vertically and horizontally.

However, Azure Web Apps require no maintenance, they are easy to use, they scale almost immediately and they are very cheap.

What is my take? Always go with PaaS services and avoid IaaS as much as you can. Let Azure do all of the operating system and service patching / maintenance. Go to IaaS only if you have no other choice.

5. Telemetry & Logging

If you are going to use pure PaaS services then most likely you will not be able to use your traditional ops tools for telemetry. The good news is that ops tooling on the Cloud is getting better.

Azure has a tool called App Insights. It allows you to:

- Store / view your application logs (info, warnings, exceptions, etc). You will need to change your logging appender to make this work.

- Analyse availability

- Analyse app dependencies

- Analyse performance

- Analyse failures

- Analyze CPU / RAM / IO (IaaS only)
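Changing the logging appender usually means plugging a new sink into the logging framework you already use. The sketch below shows the idea with Python's standard `logging` module, where the equivalent of an appender is a handler. `TelemetryHandler` and the `sent_events` list are hypothetical stand-ins; this is not the App Insights SDK, just the shape of the change.

```python
import logging


class TelemetryHandler(logging.Handler):
    """Forwards log records to a telemetry sink (hypothetical)."""

    def __init__(self, send) -> None:
        super().__init__()
        self._send = send  # callable that ships one event to the backend

    def emit(self, record: logging.LogRecord) -> None:
        try:
            self._send({
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            })
        except Exception:
            # Never let a telemetry failure break the application itself.
            self.handleError(record)


sent_events = []  # stands in for the remote telemetry service

logger = logging.getLogger("yourapp.orders")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(TelemetryHandler(sent_events.append))

# Application code keeps logging exactly as before.
logger.info("order %s created", 42)
logger.warning("payment provider slow")
```

The application code does not change; only the handler registration does, which is why this part of a migration tends to be small.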

There is also a tool called Azure Security Center. It works very well if you have VMs in your subscription, and it will guide you and give you best practices. It also has built-in machine learning, so it analyses your infrastructure and, if it sees something unusual, flags it up and notifies your ops team. It’s very handy.

6. Always test with many instances

You should be running your application with at least 2 compute instances. The last thing you want is to get to the release date, scale out and find out that your application does not scale. This means anything that can be scaled horizontally should be scaled from the start, so that you are always testing with many instances.

7. Authentication

Multi tenancy makes authentication hard, and it makes it even harder if your application is B2B. Why? Because all businesses have different authentication requirements. They might have AD and want to integrate it with your app and enable Single Sign On, or they might be a hip start-up and want to use Facebook to log in to your app. This should not be overlooked; you might need to find an open source authentication server or use a service such as AzureAD.

What is my take? Find an open source authentication server and integrate with it. Avoid AzureAD unless your customers pay for it. AzureAD is very expensive: the Basic tier costs around £0.60 per user and the Premium 2 tier costs around £5.00 per user!

8. Global Config

Hosted applications tend to be installed, so they come with installers. These installers change app keys in the config. For example, if your app integrates with Google Maps your installer might ask your customer to put in the Google Maps app keys to enable Google Maps features. In the Cloud world this is very different: customers don’t care about this, they just want to log in and use your software. So these keys are going to be the same for everyone, which means that most of them will need to be migrated out of the database and config files to App Settings (environment variables).
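Reading such a key from an environment variable can be as simple as the Python sketch below. The setting name `GOOGLE_MAPS_API_KEY`, the `get_setting` helper and the fake environment are assumptions for illustration; the real names are whatever you define in your App Settings.

```python
import os


class MissingSettingError(RuntimeError):
    """Raised when a required setting has not been configured."""


def get_setting(name: str, environ=os.environ) -> str:
    """Read a required setting from environment variables."""
    value = environ.get(name, "").strip()
    if not value:
        # Fail at start-up rather than half-way through a customer request.
        raise MissingSettingError(f"setting {name!r} is not configured")
    return value


# A fake environment keeps the example self-contained; in production you
# would call get_setting("GOOGLE_MAPS_API_KEY") and read the real one.
fake_environment = {"GOOGLE_MAPS_API_KEY": "example-key"}
maps_key = get_setting("GOOGLE_MAPS_API_KEY", environ=fake_environment)
print(maps_key)
```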

9. Relational Database Upgrades

After all these years the hardest things to update are still databases. This is especially the case if you go for the database per tenant approach. You release your software, services are now running using the new version, now you decide to upgrade all database schemas, what if one of them fails? Do you roll back the entire release? When do you upgrade the databases? Do you ping every tenant and upgrade them? Do you do it when they login?

There are some tools that can help you with database upgrades:

These tools automatically patch your databases to the correct version level.

However how you roll back, hot fix and upgrade your tenants is still up to you and your organisation.

What is my take? Use tools like Flyway, follow the Evolutionary Database Design approach and roll forward only. Find a problem, fix it and release the hotfix.
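To illustrate the roll-forward idea with a database per tenant, here is a minimal Python sketch using SQLite. It is not Flyway's API; the migration list, the `schema_version` table and the policy of continuing past a failed tenant and reporting it are all assumptions chosen for the example.

```python
import sqlite3

# Ordered, forward-only migrations (hypothetical schema).
MIGRATIONS = [
    (1, "CREATE TABLE invoices (id INTEGER PRIMARY KEY, total REAL)"),
    (2, "ALTER TABLE invoices ADD COLUMN currency TEXT DEFAULT 'GBP'"),
]


def current_version(conn: sqlite3.Connection) -> int:
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER)")
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0


def upgrade(conn: sqlite3.Connection) -> int:
    """Apply every migration newer than the database's current version."""
    version = current_version(conn)
    for number, statement in MIGRATIONS:
        if number > version:
            with conn:  # commit on success, roll back the open transaction on error
                conn.execute(statement)
                conn.execute("INSERT INTO schema_version VALUES (?)", (number,))
            version = number
    return version


def upgrade_all(tenants: dict) -> dict:
    """Upgrade each tenant independently and report failures."""
    results = {}
    for tenant_id, conn in tenants.items():
        try:
            results[tenant_id] = ("ok", upgrade(conn))
        except sqlite3.Error as error:
            results[tenant_id] = ("failed", str(error))
    return results


tenants = {
    "contoso": sqlite3.connect(":memory:"),
    "fabrikam": sqlite3.connect(":memory:"),
}
# Simulate a tenant whose database has drifted, so its upgrade fails.
tenants["fabrikam"].execute("CREATE TABLE invoices (id INTEGER)")

results = upgrade_all(tenants)
print(results)
```

One failing tenant does not block the others here, and running `upgrade` again after a hotfix only applies what is still missing.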

10. Encryption

As you are storing your customers’ data in someone else’s data center, chances are you will need to review your encryption strategy. Cloud providers are aware of this and, to make your life easier, they have released some features to assist you. For example, here are a few great encryption features from Azure:

Once again, you need to review your requirements and think about what will work for you and your organisation. You don’t want to encrypt everything using Always Encrypted, because you will not be able to do partial searches and your application’s performance will be impacted.

Please be aware that the Always Encrypted feature will most likely not prevent SQL Injection attacks, and you might not be able to use some of the new features from Microsoft if you use this technology. For example, Temporal Tables currently don’t support Always Encrypted.

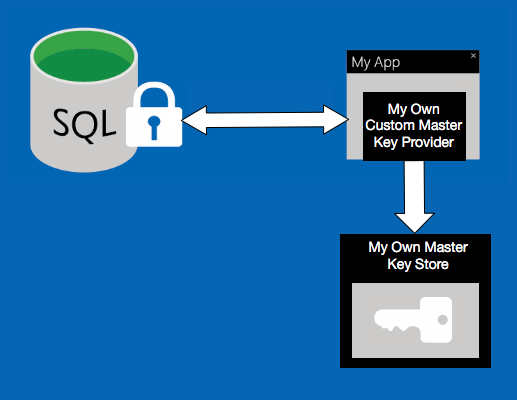

11. Key Store

If you are using a key store, you will need to update it to support multi tenancy.

12. Fault tolerance

There is a lot of noise out there about fault tolerance on the Cloud. You need to be aware of these three things:

- Integration error handling

- Retry strategies

- Circuit Breaker

Integration Error Handling

I thoroughly recommend that you do an integration error handling failure analysis.

If cache goes down, what happens? If Azure Service Bus goes down what will happen? It’s important that you design your app with failure in mind. So if cache goes down your app still works, it will just run a bit slower. If Azure Service Bus goes down, your app will continue to work but messages will not get processed.
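The “cache is down, app runs a bit slower” behaviour can be sketched as follows in Python. `FlakyCache`, `CacheUnavailable` and `load_profile` are hypothetical names; a real cache client will raise its own exception types, which you would catch instead.

```python
class CacheUnavailable(Exception):
    """Raised by the (hypothetical) cache client when the cache is down."""


class FlakyCache:
    """In-memory stand-in for a remote cache that can be switched off."""

    def __init__(self) -> None:
        self.available = True
        self._items = {}

    def get(self, key):
        if not self.available:
            raise CacheUnavailable()
        return self._items.get(key)

    def set(self, key, value) -> None:
        if not self.available:
            raise CacheUnavailable()
        self._items[key] = value


def load_profile(user_id: str, cache: FlakyCache, load_from_database) -> dict:
    """Read through the cache, but keep working if the cache is down."""
    try:
        cached = cache.get(user_id)
        if cached is not None:
            return cached
    except CacheUnavailable:
        pass  # degrade: slower, but still correct

    profile = load_from_database(user_id)

    try:
        cache.set(user_id, profile)
    except CacheUnavailable:
        pass  # caching is an optimisation, not a requirement
    return profile


database_calls = []


def fake_database(user_id: str) -> dict:
    database_calls.append(user_id)
    return {"id": user_id}


cache = FlakyCache()
load_profile("u1", cache, fake_database)   # miss: goes to the database
load_profile("u1", cache, fake_database)   # hit: served from the cache
cache.available = False
profile_during_outage = load_profile("u1", cache, fake_database)
print(profile_during_outage, len(database_calls))
```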

Retry Strategy (Optional)

A retry strategy can help you with transient failures. I’ve been told that in the early cloud days these errors were common due to infrastructure instability and noisy neighbour issues. They are a lot less common these days, however they still do happen. The good news is that the Azure Storage SDK comes with a retry strategy by default, and you can use ReliableSqlConnection instead of SqlConnection to get a retry strategy when you connect to Azure SQL.

“A custom retry policy was used to implement exponential backoff while also logging each retry attempt. During the upload of nearly a million files averaging four MB in size, around 1.8% of files required at least one retry.” - Cloud Architecture Patterns - Using Microsoft Azure

You don’t have to implement this when you migrate, you can accept that some of the users might get a transient failure. As long as you have integration error handling in place and you keep your app transactionally consistent your users can just retry manually.
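If you do decide to add retries yourself, a retry with exponential backoff and logging can look roughly like this Python sketch. `TransientError`, the delays and the attempt count are illustrative assumptions; the Azure SDKs ship their own retry policies, which you should prefer where they exist.

```python
import logging
import random
import time

log = logging.getLogger("retry")


class TransientError(Exception):
    """Stands in for a timeout or throttling error (hypothetical)."""


def with_retries(operation, attempts: int = 4, base_delay: float = 0.5,
                 sleep=time.sleep):
    """Call operation, retrying transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError as error:
            if attempt == attempts:
                raise  # out of attempts: let the caller handle it
            # 0.5s, 1s, 2s, ... plus a little jitter to avoid retry storms.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            log.warning("attempt %d failed (%s); retrying in %.2fs",
                        attempt, error, delay)
            sleep(delay)


calls = {"count": 0}


def flaky_upload() -> str:
    # Fails twice, then succeeds, to imitate a transient fault.
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("timeout")
    return "uploaded"


delays = []  # record the delays instead of really sleeping
result = with_retries(flaky_upload, sleep=delays.append)
print(result, len(delays))
```

Only errors you know to be transient should be retried; retrying a validation error or a permissions error just delays the failure.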

Circuit breaker (Optional)
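A circuit breaker stops calling a dependency that keeps failing, so your app fails fast instead of piling up timeouts, and then tries again after a cool-down. Below is a minimal Python sketch of the general pattern; the class, the thresholds and the fake clock are illustrative assumptions, not a specific library's API.

```python
import time


class CircuitOpenError(Exception):
    """Raised when calls are rejected without trying the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock=time.monotonic) -> None:
        self._threshold = failure_threshold
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, operation):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("dependency recently failed; not calling")
            # Cool-down has passed: allow one trial call (half-open).
        try:
            result = operation()
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self._threshold:
                # Open the circuit, or re-open it after a failed trial call.
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result


now = [0.0]  # fake clock so the example does not need to wait
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0,
                         clock=lambda: now[0])


def failing_send():
    raise ConnectionError("service bus down")


for _ in range(2):
    try:
        breaker.call(failing_send)
    except ConnectionError:
        pass

try:
    breaker.call(lambda: "sent")
    rejected = False
except CircuitOpenError:
    rejected = True

now[0] = 11.0  # cool-down elapsed
recovered = breaker.call(lambda: "sent")
print(rejected, recovered)
```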

13. Say goodbye to two phase commit

On the PaaS Cloud you can’t have two phase commit; it’s a conscious CAP theorem trade off. This means no two phase commit for .NET or Java or whatever. This is not an Azure limitation.

Please take a second and think about how this will impact your application.

14. Point in time backups

Azure SQL comes with point in time backup, however Azure Storage doesn’t. So you either need to implement your own or use a tool such as CherrySafe.

15. Accept Technical Debt

During the migration you will find more and more things that you would like to improve.

However, the business reality is going to be that you will need to accept some technical debt. This is a good thing: you need to get the product out there, see how it behaves and focus on the areas that actually need improvement. Make a list of things that you want to improve, get the most important things done before you ship, and during the subsequent releases remove as much technical debt as you can.

What you think needs rewriting ASAP might change. As you convert your application you will find bigger, more pressing things that you must improve, so don’t commit to fixing the trivial things prematurely.

16. New Persistence Options (Optional)

As you migrate you should seriously think about persistence. Ask yourself the following questions:

- Do you really need to store everything in the relational database?

- Can you store binary files in Azure Storage?

- What about JSON documents? Why not store them in DocumentDB?

This will increase migration complexity, so you might want to delay this and do this in the future. That’s understandable. But just so you know there are lots of reasons why you should do this.

Azure SQL is not cheap and DTUs are limited. The less you talk to Azure SQL the better. Streaming binary files to and from Azure SQL is expensive, it takes up storage space and DTUs, so why do it? Azure Storage is very cheap and it comes with Read-Access Geo Redundant Storage, so in case of a DR you can fail over.

17. Monolith, Service-based or Microservice Architecture (Optional)

If you are migrating I will say this: don’t attempt to move over to Microservices at the same time. Do one thing: get your application to the Cloud, enable multi tenancy and give the business some value. After you have achieved your conversion milestone, I would consider breaking your app down into smaller deployment modules.

Why? Because it’s complex, here are just a few things that you will need to think about:

- Data Ownership - How do different modules share data? Who owns the actual data?

- Integration - How are you going to integrate module A and B?

  - API to API communication?

  - Queues?

  - Database Integration?

- Testing, how are you going to test the integration? Are you going to automate it?

- Scalability - How are you going to scale different modules?

- Transactions - In the Cloud there is no two phase commit, how are you going to work around this?

18. Infrastructure As Code (Optional)

Now that you are going to the Cloud you need to look after one more thing. Your infrastructure provisioning will now be a config file. Someone will need to maintain this.

If you are using Azure you will define your infrastructure using Azure Resource Manager and PowerShell.

This is optional because you could set up your infrastructure manually (I don’t recommend this).

19. Gradual Release (Optional)

If you deploy your SaaS app and something goes wrong, you will not want to take all of your customers down at the same time. This means you should consider having several installations. For example, you can have different URLs for different installations, such as app.yourapp.com and app2.yourapp.com. You deploy to app.yourapp.com first and, if all goes well, you promote the release to app2.yourapp.com. This is optional because you could just deploy out of hours to app.yourapp.com.
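The promotion logic can be sketched in a few lines of Python. The `deploy` and `is_healthy` callables are hypothetical hooks into your own tooling; the sketch only shows the order of operations, which is to deploy to one installation, check it, and stop before touching the next one if the check fails.

```python
def gradual_release(version: str, installations: list, deploy, is_healthy) -> list:
    """Deploy to each installation in order; stop at the first unhealthy one."""
    promoted = []
    for installation in installations:
        deploy(installation, version)
        if not is_healthy(installation):
            break  # later installations keep running the previous version
        promoted.append(installation)
    return promoted


# Fake deployment state so the example is self-contained.
running = {"app.yourapp.com": "1.0", "app2.yourapp.com": "1.0"}


def fake_deploy(installation: str, version: str) -> None:
    running[installation] = version


def fake_health_check(installation: str) -> bool:
    # Pretend the new version is broken on the first installation.
    return installation != "app.yourapp.com"


promoted = gradual_release(
    "2.0", ["app.yourapp.com", "app2.yourapp.com"], fake_deploy, fake_health_check
)
print(promoted, running)
```

In this run the second installation is never touched, so only the customers on the first one see the faulty release.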

20. Deployment Pipeline (Optional)

You will need a deployment pipeline if you want to have confidence in your deployments. Don’t underestimate this step: depending on what services you are going to use and how complex your pipeline is, it can take weeks to set up. Chances are you will need to change your config files, transform them for different installations and tokenize them, deploy to staging slots and VIP switch to production, add error handling, establish a promotion process, etc.

There are plenty of tools that can help you with this, I’ve been using Visual Studio Team Services to orchestrate builds and deployments.
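Tokenizing config files is one of the smaller pipeline steps, and a sketch makes it clear what is involved. The Python example below uses the standard library `string.Template`; the token names, installations and values are made up for illustration, and your pipeline tool will most likely have its own token replacement task.

```python
from string import Template

# Hypothetical tokenized config shared by every installation.
CONFIG_TEMPLATE = Template(
    "database=$DATABASE_SERVER\n"
    "storage=$STORAGE_ACCOUNT\n"
    "log_level=$LOG_LEVEL\n"
)

# Hypothetical per-installation values.
INSTALLATIONS = {
    "staging": {
        "DATABASE_SERVER": "sql-staging",
        "STORAGE_ACCOUNT": "ststaging",
        "LOG_LEVEL": "debug",
    },
    "production": {
        "DATABASE_SERVER": "sql-prod",
        "STORAGE_ACCOUNT": "stprod",
        "LOG_LEVEL": "warning",
    },
}


def render_config(installation: str) -> str:
    """Fill in the tokens for one installation; fail on a missing token."""
    # substitute() raises KeyError if a token has no value, which is what you
    # want in a pipeline: a half-filled config should never be deployed.
    return CONFIG_TEMPLATE.substitute(INSTALLATIONS[installation])


production_config = render_config("production")
print(production_config)
```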

21. Avoid Vendor Lock In (Optional)

Build your application in such a way that you can migrate with relative ease to another cloud provider. I know that no matter what you do it will not be an easy journey. However, if you actually think about it and design with it in mind, it might take 1-3 months to migrate from Azure to AWS and not 1 year. How? When you redesign your application, abstract infrastructure components out so that there are no direct dependencies on Azure and the Azure SDKs.
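Abstracting an infrastructure component out can look like the Python sketch below. `BlobStore`, `InMemoryBlobStore` and `archive_report` are hypothetical names; the point is that application code depends on a small interface of your own, and only one adapter class per provider would ever import a vendor SDK.

```python
from typing import Protocol


class BlobStore(Protocol):
    """What the application needs from blob storage, and nothing more."""

    def put(self, name: str, data: bytes) -> None: ...

    def get(self, name: str) -> bytes: ...


class InMemoryBlobStore:
    """Test double; an Azure- or AWS-backed class would expose the same methods."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, name: str, data: bytes) -> None:
        self._blobs[name] = data

    def get(self, name: str) -> bytes:
        return self._blobs[name]


def archive_report(store: BlobStore, tenant_id: str, report: bytes) -> str:
    """Application code depends on BlobStore, never on a vendor SDK."""
    name = f"{tenant_id}/reports/latest.pdf"
    store.put(name, report)
    return name


store = InMemoryBlobStore()
blob_name = archive_report(store, "contoso", b"%PDF-example")
print(blob_name, store.get(blob_name))
```

Moving providers then means writing a new adapter that satisfies `BlobStore`, rather than hunting for SDK calls across the codebase. The in-memory version is also handy for unit tests.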